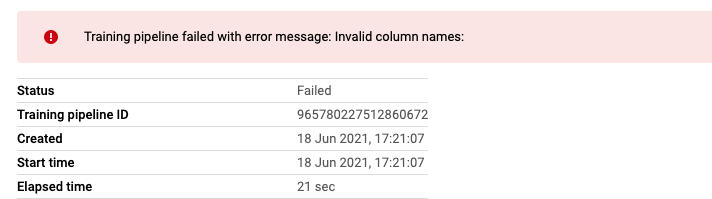

Following the documentation, I tried to deploy the Kubeflow management cluster, but running `make apply-cluster` reported:

```
The management cluster name "kubeflow-mgmt" is valid.
# Delete the directory so any resources that have been removed
# from the manifests will be pruned
rm -rf build/cluster
mkdir -p build/cluster
kustomize build ./cluster -o build/cluster
# Create the cluster
anthoscli apply -f build/cluster
I0723 14:53:19.329785 24546 main.go:230] reconcile serviceusage.cnrm.cloud.google.com/Service container.googleapis.com
I0723 14:53:23.236897 24546 main.go:230] reconcile container.cnrm.cloud.google.com/ContainerCluster kubeflow-mgmt
Unexpected error: error reconciling objects: error reconciling ContainerCluster:gcp-wow-rwds-ai-mlchapter-dev/kubeflow-mgmt: error creating GKE cluster kubeflow-mgmt: googleapi: Error 400: Project "gcp-wow-rwds-ai-mlchapter-dev" has no network named "default".
make: *** [apply-cluster] Error 1
```

This error occurs because the Kubeflow management cluster setup can only use a GCP VPC network named "default". The issue is still open and has been redirected to Anthos (`anthoscli`).
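Before applying the workaround, it may help to confirm that the project really has no network named "default". The following is a minimal sketch, assuming an installed and authenticated `gcloud` CLI; the function names `has_default_network` and `check_project_network` are hypothetical helpers, and only `gcloud compute networks list` is a real command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exits 0 if the newline-separated network names on
# stdin include one named exactly "default", non-zero otherwise.
has_default_network() {
  grep -qx 'default'
}

# Hypothetical wrapper around a real gcloud command; needs an authenticated
# gcloud CLI. Note: a gcloud failure (e.g. not logged in) also ends up in the
# "no network" branch, because grep then sees empty input.
check_project_network() {
  local project="$1"
  if gcloud compute networks list --project="$project" --format="value(name)" \
      | has_default_network; then
    echo "Project ${project} has a network named \"default\"."
  else
    echo "Project ${project} has no network named \"default\"."
  fi
}
```

For the project in the log above, this would be `check_project_network gcp-wow-rwds-ai-mlchapter-dev`.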

Workaround: create a new GKE cluster manually, then set `MGMT_NAME` to the name of that existing cluster:

```shell
export MGMT_NAME=kubeflow-exp
```

After that, `make apply-cluster` works properly.
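The manual cluster creation in this workaround could look roughly like the sketch below, which uses `gcloud container clusters create` with `--network`/`--subnetwork` to place the cluster on an existing, non-"default" VPC. This is a sketch under assumptions: the project, zone, network and subnet values are hypothetical placeholders, all other cluster settings are left at GKE defaults, and it is not verified that the Kubeflow management setup needs nothing beyond this. The function `create_mgmt_cluster` is hypothetical, and the script only prints the command unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Sketch of the workaround: create the management GKE cluster by hand on an
# existing (non-"default") VPC, then reuse it via MGMT_NAME.
# ASSUMPTION: every value below except MGMT_NAME is a hypothetical placeholder.
PROJECT="${PROJECT:-my-gcp-project}"   # hypothetical project ID
ZONE="${ZONE:-us-central1-a}"          # hypothetical zone
NETWORK="${NETWORK:-my-vpc}"           # hypothetical existing VPC network
SUBNET="${SUBNET:-my-subnet}"          # hypothetical subnet in that VPC
export MGMT_NAME="${MGMT_NAME:-kubeflow-exp}"

create_mgmt_cluster() {
  local cmd=(gcloud container clusters create "$MGMT_NAME"
    --project="$PROJECT"
    --zone="$ZONE"
    --network="$NETWORK"
    --subnetwork="$SUBNET")
  # Dry run by default: print the command; set DRY_RUN=0 to actually run it.
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

create_mgmt_cluster
# Once the cluster exists, rerun the Makefile target against it:
#   make apply-cluster
```

Because `export` only affects the current shell and its children, the script would need to be sourced (for example `DRY_RUN=0 source create-mgmt.sh`, with `create-mgmt.sh` a hypothetical filename) for `MGMT_NAME` to still be set when `make apply-cluster` runs afterwards.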