After discussed with technical support guys from AWS, I get more information about how to use all the service of AWS to build a streaming ETL architecture, step by step.

The main architecture could be described by the diagram below:

AWS S3 is the de-facto data lake. All the data, no matter from AWS RDS or AWS Dynamo or other custom ways, could be written into AWS S3 by using some specific format, such as Apache Parquet or Apache ORC (CSV format is not recommend because it’s not suitable for data scan and data compression). Then, data engineers could use AWS Glue to extract the data from AWS S3, transform them (using PySpark or something like it), and load them into AWS Redshift.

For some frequently-used data, they could also be put in AWS Redshift for optimised query. When it is needed to join tables from both AWS S3 and AWS Redshift, we could also use AWS Redshift Spectrum.

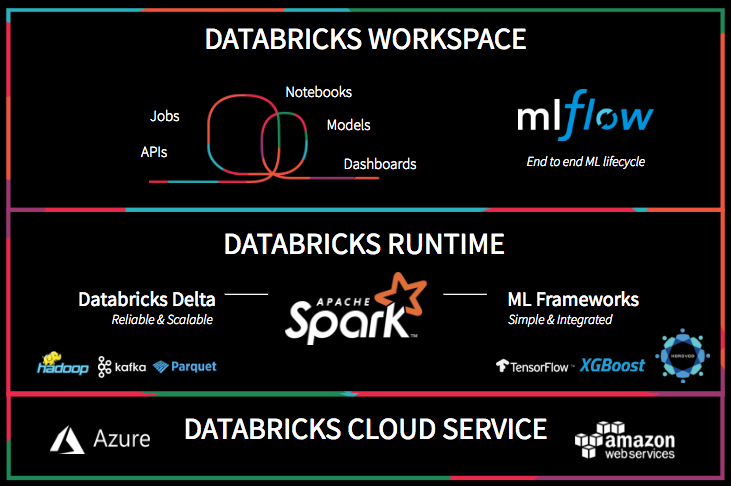

BTW, I also joined a workshop about DataBricks’ new Unified Data Analytics and Machine Learning Platform which is built on AWS. It contains

1. Delta Lake for data storage and schema enforcement.

2. Notebook to let user directly write code and run them to process and analyze data by Apache Spark. Just like Jupyter Notebook.

3. MLFlow use above data to train machine learning model.

I used Apache Spark for learning about 4 years ago. At that time, I even need to build java/scala package by myself, upload and run it. Debugging is tedious because I can only scan logs of CLI again and again to find mistakes in code. But now, Databricks give a much more convenient solution for the data scientists and developers.

Someone who is interesting in this platform could try free edition of it https://databricks.com/try-databricks